Nvidia’s RTX 3090 Ti, the supercharged Ampere flagship which recently went on sale, has been the subject of an interesting experiment to tame its power consumption – with the result that even when it’s seriously cut back by a third in terms of the wattage supplied, Team Green’s GPU still outdoes AMD’s current flagship.

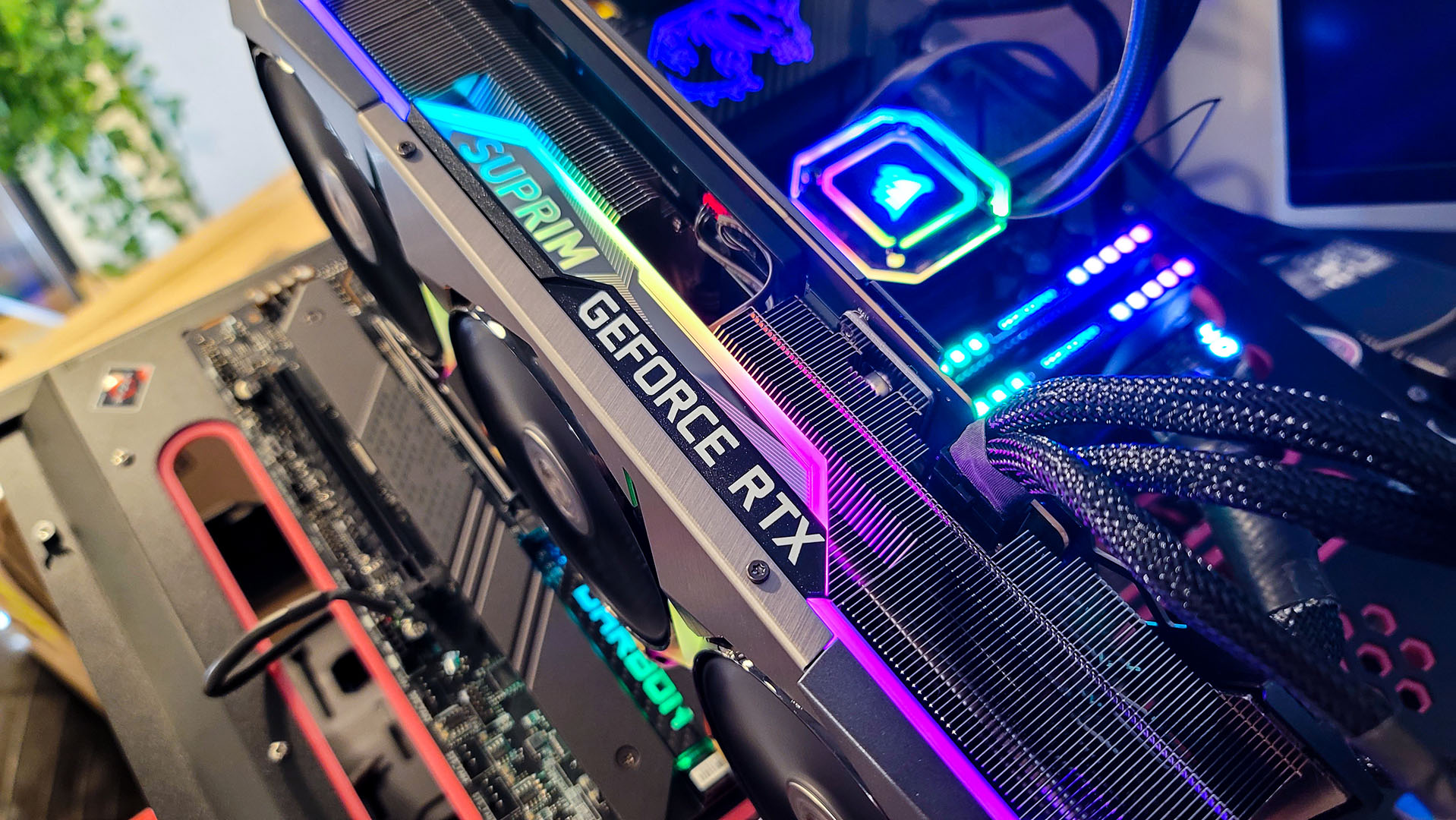

This benchmarking comes from Igor’s Lab, which used an MSI GeForce RTX 3090 Ti Suprim X and reduced the graphics card’s power limit to 300W. Normally this GPU is rated to pull 450W, though in this testing Igor measured the 3090 Ti drawing 465W at default settings and 313W when limited.

Running a range of 10 games at 4K resolution and taking average frame rates, Igor found that the default (450W) RTX 3090 Ti averaged 107 frames per second (fps), whereas at 300W, it wasn’t all that much slower at 96 fps.

Granted, that’s still a performance gap – of just over 10%, so it’s appreciable – but hardly a huge gulf.

Tellingly, Igor also benchmarked some AMD graphics cards as part of this experiment and found that the RX 6900 XT, Team Red’s current flagship, was slower than the 300W-limited 3090 Ti, hitting 92 fps on average.

But the really interesting bit here is that the RX 6900 XT was chugging 360W to achieve that performance, so around 15% more power than the artificially constrained 3090 Ti (at 313W).
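To put those numbers side by side, here is a rough sketch that divides each card’s average frame rate by its measured power draw. The fps and wattage figures are the ones quoted above from Igor’s Lab; the `fps_per_watt` helper and the labels are our own illustrative names, and frames per watt is only one simple way to express efficiency.

```python
# Average 4K frame rate (fps) and measured power draw (watts),
# as quoted in this article from Igor's Lab testing.
results = {
    "RTX 3090 Ti (default)": (107, 465),
    "RTX 3090 Ti (limited)": (96, 313),
    "RX 6900 XT": (92, 360),
}


def fps_per_watt(fps, watts):
    """Illustrative efficiency metric: average frames per second per watt."""
    return fps / watts


efficiency = {
    name: fps_per_watt(fps, watts) for name, (fps, watts) in results.items()
}

# Print the cards from most to least efficient by this metric.
for name, value in sorted(efficiency.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {value:.3f} fps per watt")
```

By this measure the limited 3090 Ti comes out ahead of the RX 6900 XT, which in turn beats the 3090 Ti at default settings.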

Analysis: Some striking discoveries – and hope for Nvidia’s next-gen?

This illustrates a few things, the most striking of which is that the RTX 3090 Ti can actually be pretty efficient – more so than AMD’s current flagship – when it’s reined in.

The other equally striking element here is how much less efficient the 3090 Ti becomes when it’s running at full power: consider that power usage goes up almost 50% (from 313W to 465W) to get a performance boost of a little over 10% compared to the power-limited configuration. In other words, to claim the frame rate king title and produce a new flagship Ti variant which beats the old 3090 by an appreciable amount to look like a worthwhile successor, Nvidia has had to ramp up power consumption massively.
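The trade-off can be checked with some quick arithmetic on Igor’s measured figures for the limited and default 3090 Ti (313W at 96 fps versus 465W at 107 fps). This is only a back-of-the-envelope sketch; `percent_increase` is a helper name we have made up for illustration.

```python
def percent_increase(old, new):
    """Percentage change going from old to new."""
    return (new - old) / old * 100


# Measured figures quoted in this article for the RTX 3090 Ti.
limited_watts, limited_fps = 313, 96
default_watts, default_fps = 465, 107

power_increase = percent_increase(limited_watts, default_watts)
fps_increase = percent_increase(limited_fps, default_fps)

# Extra watts spent for each additional frame per second gained.
extra_watts_per_fps = (default_watts - limited_watts) / (default_fps - limited_fps)

print(f"Power draw: +{power_increase:.1f}%")
print(f"Frame rate: +{fps_increase:.1f}%")
print(f"Extra watts per extra fps: {extra_watts_per_fps:.1f}")
```

On these numbers, each extra frame per second at default settings costs close to 14W, compared to an average of a little over 3W per frame for the limited card.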

As for cutting the power draw as Igor has done here, on the face of it this makes little sense in practical terms. Why fork out such a lot of cash (the 3090 Ti starts at $1,999, or around £1,550 / AU$2,700) for the fastest card on the market only to artificially limit its performance? That’s just not what people are going to do: a gamer buying the 3090 Ti is likely doing so for bragging rights as much as anything, and showing off those frame rates (not how they’ve maximized efficiency).

Still, as our sister site PC Gamer, which spotted this story, points out, there could be an argument for reining in the power consumption of this graphics card somewhat, if you tweaked it down for a more conservative 5% frame rate drop. That’ll likely still save you a considerable amount of wattage, and maybe help keep your PC (and the room it’s in) cooler in a hot summer, say, without a particularly noticeable difference in terms of performance. Rising energy costs and climate-related issues could also be considerations.

All that said, the real-world application of taming the 3090 Ti is still on the flimsy side, but there’s something else interesting here pertaining to Nvidia’s next-gen products. All we’ve been hearing lately is how power-hungry RTX 4000 models (or whatever they’ll be called) could be, and the danger that even away from the flagship, Lovelace graphics cards might make big demands on your PSU.

We’ve heard chatter that the RTX 4080 might use the same amount of power as the 3090 Ti (450W) for example, so the fear is that even the 4070 could be problematic for some PCs with somewhat more modest power supplies.

AMD, on the other hand, is expected to do much better on the efficiency front with next-gen RDNA 3 – but maybe seeing how the tamed 3090 Ti performs versus the RX 6900 XT gives us some hope that Nvidia won’t be as badly outmatched in efficiency terms as the rumor mill seems to believe.

Or at the very least, if Team Green’s relative performance is better than AMD’s (some rumors have suggested massive performance gains, which would certainly explain the major power demands), then gamers may theoretically have the headroom to notch down their Lovelace graphics card a bit in order to get a more efficient and (relatively) economical level of power draw.
